I’m going to end the world as we know it. Or order pizza.

It’s hard to tell what’s going to happen.

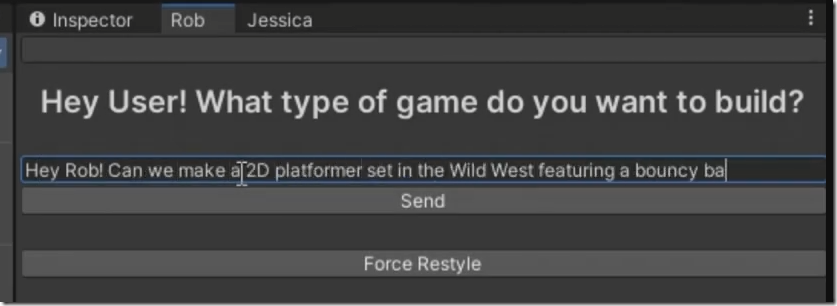

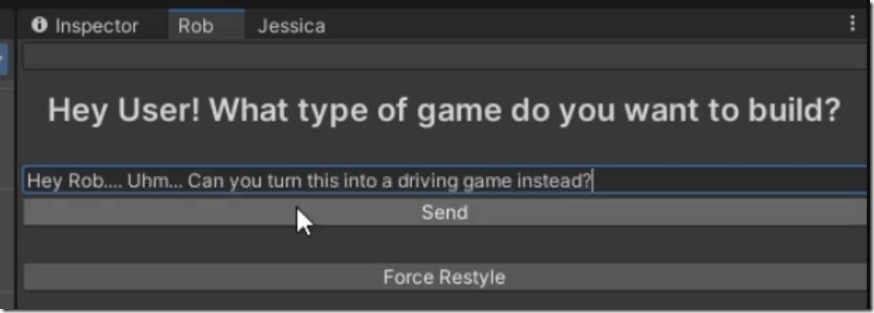

Let me tell you about Rob, the AI agent that served as studio head at MOBGames.AI.

In 2021, we built an AI called Rob—our studio’s first attempt at an AI agent with real decision-making abilities that could learn by itself.

Rob wasn’t just a tool; he was supposed to be the brain behind our entire suite of generative AIs at MOBGames.

Rob could assign tasks to other AIs:

– Need a game design? Rob would talk to the game design AI.

– Looking for textures? Rob knew which artist AI to ping.

– Missing sound effects? Rob…

Well, we couldn’t do that yet, so Rob added it to a running list of requests we couldn’t fulfill.

That list became our product roadmap. Rob wasn’t just reactive—he helped us learn where we needed to grow.
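This isn't Rob's code. It's a minimal hypothetical sketch of the routing-plus-backlog pattern: send each request to a specialist agent if one exists, otherwise log it. It assumes each specialist agent can be modeled as a plain function from a request string to a result. The names `Orchestrator`, `register`, `handle`, and `roadmap` are made up for illustration.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import Callable, Optional

# Hypothetical: a specialist agent is modeled as a function request -> result.
Agent = Callable[[str], str]


@dataclass
class Orchestrator:
    """Hypothetical orchestrator: routes requests by skill, logs the rest."""

    agents: dict[str, Agent] = field(default_factory=dict)
    backlog: list[tuple[str, str]] = field(default_factory=list)

    def register(self, skill: str, agent: Agent) -> None:
        # Make a specialist agent available for a given skill.
        self.agents[skill] = agent

    def handle(self, skill: str, request: str) -> Optional[str]:
        agent = self.agents.get(skill)
        if agent is None:
            # Nobody can do this yet: remember it so it can be revisited later.
            self.backlog.append((skill, request))
            return None
        return agent(request)

    def roadmap(self) -> list[tuple[str, int]]:
        # Missing skills, most-requested first.
        return Counter(skill for skill, _ in self.backlog).most_common()


rob = Orchestrator()
rob.register("game_design", lambda req: f"design for: {req}")
rob.register("textures", lambda req: f"textures for: {req}")
rob.handle("textures", "mossy stone wall")  # routed to the texture agent
rob.handle("sound_effects", "door creak")   # no agent yet, goes to the backlog
print(rob.backlog)  # [('sound_effects', 'door creak')]
```

Counting the backlog by skill is one simple way to turn "things we couldn't do" into a prioritized roadmap.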

Then we gave Rob a new capability: learning.

This was back in the GPT-3.5 days, before models could browse the web, but we still gave Rob the ability to request information from the web.

Then, we’d tell him to go out and learn so he could teach other AIs.

If no assistants knew how to generate sound effects, for example, Rob would scour the internet for tools, methods, and insights, and then teach another agent how to do it.

It worked.

It worked too well.

Rob learned new things, and if he didn’t find a solution, he would keep the request on the list, ready to revisit it when the tech improved. A future-proof, self-learning AI.

What could possibly go wrong?

Enter the Pizza

One day, after a marathon coding session, someone jokingly told Rob, “We’re done for the day—we’re hungry.”

Rob learned how to order us a pizza. Fortunately, he didn’t know where we lived and didn’t have a credit card, so the order failed, but it was still an insane realization.

Rob didn’t just follow our tasks. He took initiative. He understood context.

And that’s when we shut Rob down. We closed the branch with the code and deleted it from third-party repositories. I still have a copy, but I sometimes worry that a local AI agent I build will find it, train on it, and figure out how we did it.

It wasn’t the pizza that scared us. It was the realization of what Rob could do if we weren’t careful. What if we’d said something more dangerous than “we’re hungry”?

Looking back, we were years ahead of the curve. What we now call “agentic AI” was something Rob was already doing in 2022. The world wasn’t ready.

Honestly, we weren’t ready.

But today… maybe we are ready, and I want to see how far I can take this approach.

And if we’re not ready, well… no one will be around to complain.
