OK, if you made it here and you don’t know what prompt engineering is, then… Welcome!

In this article, and this series, I will introduce you to prompt engineering, explaining what it is, and hopefully also relaying the issues with it.

This article introduces prompt engineering by explaining more about how language models work and then shows you the first problem with prompt engineering.

First of all, let me start by saying that I don’t believe in defining things. I am not going to define what prompt engineering is, because then the point becomes fitting my arguments into my definition. Those who have a different definition might then disagree with the definition rather than with the argument.

However, the bare minimum you need to know is that prompt engineering is about turning language model prompts into desirable results, and frankly that’s enough to get us started.

You see, we have already reached the essence of this series’ conclusion: we have some input (a prompt) and want some output (text, images, video, or anything else a language model can produce).

Normally, prompt engineers will attempt to align the output with a desired result by tweaking the input. As it turns out, that’s not really a good idea.

To understand why this is the case, we need to look at some fundamentals of how language models work and to do that, we need to start much earlier than when we have a chat window open in front of us.

How Large Language Models Work

Let’s start with something simple. Let’s count even numbers from 2 to 8 and see what happens.

2, 4, 6, 8

Easy, right?

Now… What do you think comes next?

Chances are, you are going to answer 10. That makes sense because in most situations where 2, 4, 6, and 8 come in that order, the next thing is going to be 10.

Congratulations, you have just understood how language models work. They simply make a very informed guess as to what comes next in a certain sequence.

How do they guess this? Well, they are trained on vast amounts of data. Using that training data, a model statistically works out a likely next item, adds it to the sequence, and then repeats that process.
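To make that loop concrete, here is a deliberately tiny sketch in Python. It is a toy under heavy assumptions, not how real LLMs are built (they use neural networks over tokens rather than tables of counts), but it follows the same steps: count which item follows which in the training data, pick the most likely next item, append it, and repeat. The training sequences, the two-item context window (`CONTEXT_SIZE`), and every function name here are hypothetical.

```python
from collections import Counter, defaultdict

# Hypothetical toy training data: each example is a sequence of items.
TRAINING_DATA = [
    ["2", "4", "6", "8", "10"],
    ["2", "4", "6", "8", "10"],
    ["2", "4", "6", "8", "10", "12"],
]

CONTEXT_SIZE = 2  # hypothetical: how many previous items the toy model looks at


def train(sequences, context_size=CONTEXT_SIZE):
    """Count which item follows each run of `context_size` items."""
    counts = defaultdict(Counter)
    for seq in sequences:
        for i in range(context_size, len(seq)):
            context = tuple(seq[i - context_size:i])
            counts[context][seq[i]] += 1
    return counts


def predict_next(counts, sequence, context_size=CONTEXT_SIZE):
    """Return the most frequent follower of the last items, or None if unseen."""
    followers = counts.get(tuple(sequence[-context_size:]))
    if not followers:
        return None  # the toy model has never seen this context
    return followers.most_common(1)[0][0]


def generate(counts, prompt, max_new_items=3):
    """Predict the next item, append it, and repeat."""
    sequence = list(prompt)
    for _ in range(max_new_items):
        nxt = predict_next(counts, sequence)
        if nxt is None:
            break
        sequence.append(nxt)
    return sequence


model = train(TRAINING_DATA)
print(generate(model, ["2", "4", "6", "8"]))
```

Running it prints `['2', '4', '6', '8', '10', '12']`. The model predicts 10, then feeds its own guess back in as context to predict 12, and stops when it reaches a context it has never seen.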

Now, in the above sequence of even numbers, 10 might not always be the right answer. For example, if your training data includes vast amounts of chants from US cheerleaders, then the next items in the sequence might look like this:

2, 4, 6, 8. Who do we appreciate?

So what a model guesses as the next item depends on how it has been trained.

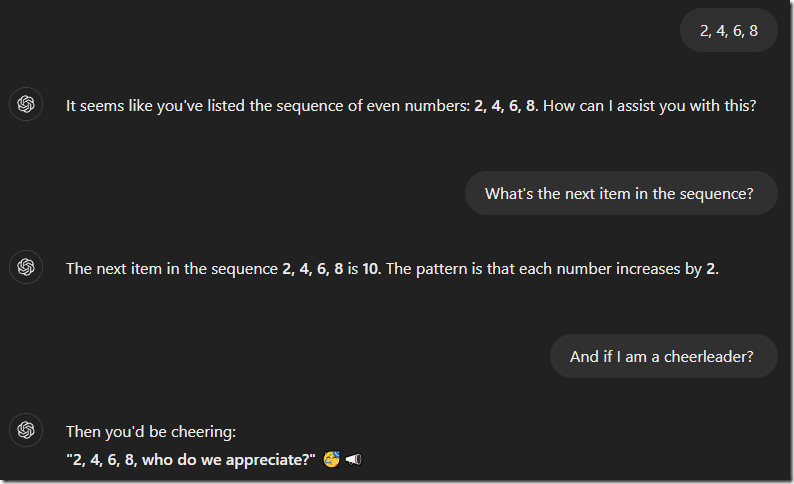

In the above screenshot, I first input just 2,4,6,8 as a sequence, and ChatGPT asks what I want to do with it.

I then prompt it to tell me the next item in the sequence, and it responds with 10, explaining why that is the next item.

Finally, I ask what the item would be if I’m a cheerleader, and it adds that I would be cheering “Who do we appreciate?” instead.

I am prompt engineering it to give me the answer I want. Figuring out what to say in order to get that answer is what prompt engineering is all about.

The First Problem: Models Change

Note that the above screenshot is from ChatGPT4 on December 9, 2025. Why is this important? Well, as I mentioned, what you get as a result depends on how your model has been trained, and that brings us to our first problem with prompt engineering.

You see, models change (most of them, at least), and so does the temperature. No, I’m not talking about the weather, but about the randomness built into the way models guess the next item, which is usually controlled by a setting called temperature. The higher the temperature, the more loosely the model chooses the next item, and the more often it picks a less likely option.
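One common way to implement temperature, sketched below in Python, is to raise each next-item probability to the power 1/T and renormalise, which is the same as dividing the model’s log-probabilities by T before the softmax. The probabilities are made up for illustration, and `apply_temperature` and `sample` are hypothetical helpers, not any provider’s API.

```python
import random


def apply_temperature(probabilities, temperature):
    """Rescale a next-item distribution with temperature T.

    Computes p ** (1 / T) for every item and renormalises, which is equivalent
    to dividing log-probabilities by T and applying softmax again.
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    weights = {item: p ** (1.0 / temperature) for item, p in probabilities.items()}
    total = sum(weights.values())
    return {item: w / total for item, w in weights.items()}


def sample(probabilities, temperature, rng):
    """Draw one next item at the given temperature."""
    adjusted = apply_temperature(probabilities, temperature)
    items = list(adjusted)
    return rng.choices(items, weights=[adjusted[i] for i in items], k=1)[0]


# Made-up next-item probabilities after the prompt "2, 4, 6, 8".
next_item = {"10": 0.75, "Who do we appreciate?": 0.25}

for t in (0.5, 1.0, 2.0):
    adjusted = apply_temperature(next_item, t)
    print(t, {item: round(p, 3) for item, p in adjusted.items()})

# One sampled answer at a high temperature, using a seeded generator.
print(sample(next_item, 2.0, random.Random(0)))
```

With these numbers, a temperature of 0.5 sharpens the split to 90/10, 1.0 leaves it at 75/25, and 2.0 flattens it to roughly 63/37, so the less likely answer turns up more often as the temperature rises.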

For example, let’s say your training data looks like this:

2,4,6,8,10

2,4,6,8,10

2,4,6,8,10

2,4,6,8, who do we appreciate?

Based on this, there’s a 25% chance that the model will pick the cheerleader response.

There are ways to change this behavior, but for simplicity’s sake, let’s assume we just follow statistics.
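Here is the same arithmetic as a small Python sketch, using the four training examples above as (prompt, continuation) pairs. It also shows one of those ways to change the behavior, greedy decoding, which always picks the most frequent continuation, next to simply following the statistics. The pair representation and the function names are illustrative assumptions, not how any real model stores its training data.

```python
from collections import Counter
import random

# The four training examples from the text, as (prompt, continuation) pairs.
TRAINING_DATA = [
    ("2,4,6,8", "10"),
    ("2,4,6,8", "10"),
    ("2,4,6,8", "10"),
    ("2,4,6,8", "who do we appreciate?"),
]


def next_item_distribution(data, prompt):
    """Relative frequency of each continuation seen after `prompt` in the data."""
    counts = Counter(cont for p, cont in data if p == prompt)
    total = sum(counts.values())
    return {cont: n / total for cont, n in counts.items()}


def greedy(dist):
    """Always pick the single most likely continuation."""
    return max(dist, key=dist.get)


def follow_statistics(dist, rng):
    """Pick a continuation with probability equal to its frequency."""
    return rng.choices(list(dist), weights=list(dist.values()), k=1)[0]


dist = next_item_distribution(TRAINING_DATA, "2,4,6,8")
print(dist)  # {'10': 0.75, 'who do we appreciate?': 0.25}
print(greedy(dist))  # 10

rng = random.Random(0)  # fixed seed so the run is repeatable
print(Counter(follow_statistics(dist, rng) for _ in range(10_000)))
```

Greedy decoding answers 10 every time, while following the statistics gives roughly three quarters 10 and one quarter cheer over the 10,000 seeded draws.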

Normally, a 75% statistical chance is more than enough to pick the ‘correct’ answer, but what if someone retrains the model with this data:

2,4,6,8,10

2,4,6,8,10

2,4,6,8,10

2,4,6,8, who do we appreciate?

2,4,6,8, who do we appreciate?

2,4,6,8, who do we appreciate?

Now there’s suddenly a 50/50 chance that either option is chosen.

The prompt you had made earlier now might result in a different outcome because the model itself changes.
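To see that effect in code, the sketch below sends the same prompt, with the same random seed, to a toy “model” before and after retraining on the six examples above. Only the data changes, yet the expected share of cheerleader answers doubles from 25% to 50%. The (prompt, continuation) representation, `run_prompt`, and the seed value are hypothetical choices for illustration.

```python
from collections import Counter
import random

PROMPT = "2,4,6,8"
CHEER = "who do we appreciate?"

# Original training data: three "10" examples and one cheer.
ORIGINAL = [(PROMPT, "10")] * 3 + [(PROMPT, CHEER)]
# Retrained data: the six examples from the text (two extra cheers added).
RETRAINED = ORIGINAL + [(PROMPT, CHEER)] * 2


def next_item_distribution(data, prompt):
    """Relative frequency of each continuation seen after `prompt`."""
    counts = Counter(cont for p, cont in data if p == prompt)
    total = sum(counts.values())
    return {cont: n / total for cont, n in counts.items()}


def run_prompt(data, prompt, seed, runs=1_000):
    """Send the same prompt `runs` times and tally the answers."""
    dist = next_item_distribution(data, prompt)
    rng = random.Random(seed)  # same seed for both models, so only the data differs
    return Counter(rng.choices(list(dist), weights=list(dist.values()), k=runs))


print(next_item_distribution(ORIGINAL, PROMPT))   # {'10': 0.75, 'who do we appreciate?': 0.25}
print(next_item_distribution(RETRAINED, PROMPT))  # {'10': 0.5, 'who do we appreciate?': 0.5}
print(run_prompt(ORIGINAL, PROMPT, seed=42))
print(run_prompt(RETRAINED, PROMPT, seed=42))
```

Nothing about the prompt changed; the different tallies come entirely from the retrained data.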

This happens more often than you might think. OpenAI, for example, regularly updates its models, not only with new data but also by adding features to existing models to make them more secure or to avoid litigation. It can also retire or replace models at will.

When that happens, all your work engineering the perfect prompt is now wasted. Back to the drawing board.

How To Fix Changing Models?

The short answer is that you can’t avoid this problem, at least not with models you do not control. Technically, you can run your own models, but even then there is a catch: if you never change those models, you also lose out on important features.

- You cannot implement security fixes if your model has problems.

- You don’t get newer and better models when those are released.

- You cannot retrain your model with new information, because that too changes the model.

For most users, then, prompt engineering is not going to be a viable approach to working with language models. We are relegated to what most people do in any case, which is to have a conversation with an LLM through web interfaces like ChatGPT.

Then, you just have to hope that OpenAI doesn’t change the way their models work.

Why is this such a big issue? Can’t you just keep that conversation? After all, people change too, and you are still able to have a conversation with your boss or your aunt during the annual family gatherings.

And you would be right about that, if all you want is to chat conversationally with someone.

However, for any type of desired output, you can’t rely on casual conversation. After all, your boss might fire you and your aunt might read crazy news on Facebook and decide that aliens are eating your plants. If you expect a certain predictable outcome, you cannot do so if the model, or the person, is not predictable.

If they are not predictable, you end up having to redo everything you’ve learned about talking with your boss or your aunt or your language model every time something changes.

And that’s the first reason why you should not rely on prompt engineering.

Summary

In this article, I have explained how prompts work with language models.

I showed you how a language model really just predicts the next item in a sequence based on statistical probabilities. If those probabilities change, which happens whenever the model changes, the prompt has to change too, so you are back to redoing everything you have done or invested every time someone changes the model.

Because of this, using prompt engineering to craft the perfect input for a perfect output is a waste of time when the model is unpredictable and can change.

Feel free to share this article if you found it interesting and useful. I greatly appreciate any comments or feedback too, so let me know if you have something on your mind.