When SharePoint was a big thing, a luminary called Marc D. Anderson coined the idea of the middle tier of SharePoint development in his now-famous Middle-Tier Manifesto of SharePoint Development.

In short, Marc divided SharePoint development into three distinct tiers based on how you build solutions, the capabilities you get, and the skills you need.

AI development has something very similar, because here, too, we are building solutions.

It can be useful to follow the Middle-Tier Manifesto model and divide our craft into three distinct tiers:

- Tier one: Customizing ready-made solutions,

- Tier two: Composing solutions using no-code/low-code platforms, and

- Tier three: Building applications with code.

Each tier comes with its own audience, use cases, and a mix of opportunities and challenges.

Before we begin, however, note that I am talking about application development here, not data or model development. Those are very different disciplines and are not covered by these three tiers.

Let’s break them down:

Tier 1: Customizing Ready-Made Solutions

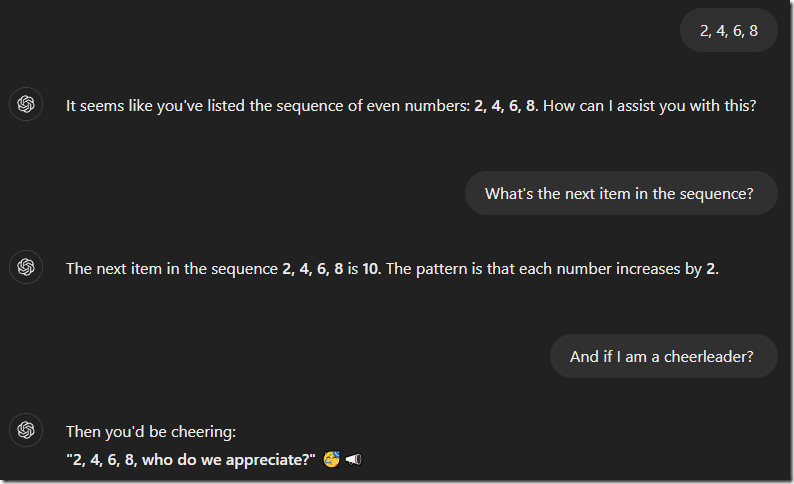

The first tier is defined by using existing tools to create specialized versions of those tools. A typical example is creating custom GPTs with ChatGPT.

The interaction here is largely in natural language, although you might find settings pages for tweaking certain details. In other words, you describe what you want created by prompting.

This is the domain of prompt engineers and where you will find most non-technical users. It is the easiest and fastest way to get started, but heed the dangers below before you get too excited.

Audience: General users, non-technical professionals, and those looking to streamline workflows without learning technical skills.

Typical Uses: Chatbots, content generation, summarization, brainstorming, and automating repetitive tasks.

Tools like ChatGPT or custom GPTs, as well as LLMs with system prompts, allow users to integrate AI into their daily routines without needing deep expertise.

Solutions are, however, mostly simple and do not need to address complex requirements such as scaling, performance, migration, custom authentication, and so on.

A common task might be to customize the system prompts or instructions for a chatbot, if more complex methods of customization are not available.

Benefits:

- Accessibility: No technical knowledge is required. Anyone can begin here and create quick solutions for simple problems.

- Speed: Solutions are ready out of the box.

- Flexibility: Tools can adapt to a variety of use cases.

Dangers:

- Over-reliance: Users may adopt AI outputs without critical evaluation.

- Lack of depth: Tools are powerful but limited by their generalist nature.

- Limited control: You can largely create only what you can prompt, and capabilities are limited to what the platform offers.

Examples:

- ChatGPT and custom GPTs

- Claude with custom system prompts

- Gemini with custom system prompts

In short, use the first tier when you don’t want to invest in learning more advanced methods and just want to get started, or when you need to solve very simple problems that do not require a full solution.

Tier 2: Composing Solutions Using No-Code/Low-Code Platforms

This is the tier that Marc would call the middle tier, and it is here that specific skills start to matter. We can no longer just talk to our chosen platform; we need to learn that platform and its capabilities.

In return, we gain significant advantages over the first tier in that we can create vastly more complex solutions and have much more control.

Typically, the second tier involves working within a specific application or platform. This might be drag-and-drop composition as in Flowise, dropdown-driven creation as in Agent.ai, or something else entirely, but the core idea is always the same: reusing and customizing existing components and composing functionality from them.

Audience: Entrepreneurs, small businesses, and tech-savvy professionals seeking bespoke solutions without deep coding expertise.

Typical Uses: Creating custom chatbots, workflow automations, predictive models, and simple AI-driven apps.

Platforms and apps like Flowise, Bubble, Zapier, or Make empower users to design tailored AI experiences.

Benefits:

- Empowerment: Users can create solutions that better match their specific needs.

- Scalability: Intermediate complexity is achievable without a full development team.

- Faster Deployment: Projects take days or weeks rather than months.

Dangers:

- Hidden limitations: Platforms may cap functionality or scalability.

- Dependency risks: Reliance on proprietary platforms can lead to vendor lock-in.

- Security gaps: Misconfigurations can expose vulnerabilities.

Examples:

- Flowise

- Agent.ai

- Bubble

- Zapier

- Make

In short, use the second tier of AI development when you need more power and control and are willing to learn a platform and commit to it.

Tier 3: Building Applications with Code

Finally, the third tier of AI development is where we fire up Visual Studio or VS Code, care about things like variables, and can say words like Python and TensorFlow and mean it!

The third tier offers the most control, the most power, and the most danger. This is where you can control every nuance of your project and create complex and powerful solutions.

However, you also need to know how code works. I know, I know, you think you can just ask an AI to write the code for you, but that is a dangerous simplification. Using an AI to write code still requires you to know what that code does so you can modify, monitor, and debug it.

Audience: Developers, data scientists, and organizations with resources to invest in custom AI solutions.

Typical Uses: Advanced applications like AI-powered SaaS, industry-specific automation, and deeply integrated systems. Building with frameworks (like TensorFlow or PyTorch) and programming languages enables unparalleled customization and control.
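To make the contrast with the first tier concrete: the system-prompt customization described there becomes, in the third tier, something your own code assembles and controls. The Python sketch below is illustrative only. It assumes the role-based message format (`system`, `user`, `assistant`) that many chat-model APIs accept, makes no actual API call, and its helper name `build_conversation` and `max_turns` parameter are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ChatMessage:
    role: str  # assumed roles: "system", "user", or "assistant"
    content: str


def build_conversation(
    system_prompt: str,
    history: list[tuple[str, str]],
    user_input: str,
    max_turns: int = 10,
) -> list[dict]:
    """Hypothetical helper: assemble a role-based message list for a chat model.

    history holds earlier (user, assistant) exchanges; only the most recent
    max_turns exchanges are kept, and max_turns <= 0 drops history entirely.
    """
    # The system prompt always comes first; in tier one, this is all you control.
    messages = [ChatMessage("system", system_prompt)]

    # In code, you decide exactly how much prior conversation the model sees.
    recent = history[-max_turns:] if max_turns > 0 else []
    for user_turn, assistant_turn in recent:
        messages.append(ChatMessage("user", user_turn))
        messages.append(ChatMessage("assistant", assistant_turn))

    # The new user input goes last.
    messages.append(ChatMessage("user", user_input))
    return [{"role": m.role, "content": m.content} for m in messages]
```

Even in something this small, you decide how much history the model sees, and that is exactly the kind of detail you need to understand, not just generate, in order to modify, monitor, and debug it.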

Benefits:

- Infinite Customization: Tailored solutions with no platform constraints.

- Performance: Applications can be optimized for specific use cases.

- Ownership: Full control over the stack reduces dependency on third-party services.

Dangers:

- Resource Intensive: Requires expertise, time, and budget. Yes, even with ChatGPT as your copilot.

- Complexity: Maintaining and scaling applications demands ongoing effort.

- Ethical Risks: Higher risk of deploying unintended biases or security flaws.

Examples:

- Python

- C#

- JavaScript

- PyTorch

In short, when you really need to control every detail and want all the power you can get, go with the third tier of development. Do not mistake this power for ease; you still need to know how to write code to work here safely.

Conclusion

And there you have it: the three tiers of AI application development.

As you begin, or whenever you select an approach, make sure you understand the benefits and dangers of each tier. Don’t start with the most complex solution when all you want is some ideas for a birthday card, and, equally, don’t try to prompt-engineer your way into something that belongs in the third tier.

What are your thoughts? Let me know in the comments below.